Hello Friends!!

Let's explore imports and exports in ODI. This is in fact a very important part of being able to implement a development project successfully.

We need to know how to export development artefacts from one environment and import them into another.

The typical life cycle goes like this: develop projects in the Development environment, then export the projects, models, etc. and check them in to SVN.

For a different environment, check out the same artefacts from SVN and import them into that environment's repository.

Now let's dig into this topic in a simple manner.

To understand it, let's first look at the concept of the Internal ID.

An internal ID is a unique ID that ODI assigns to every object developed inside it.

How is the Internal ID generated?

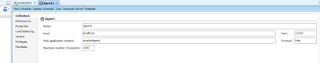

When you create a work repository, you are asked for a repository ID. This can be any number from 0 to 999.

If the repository ID given is, say, 2, ODI pads it to 002 so that it becomes a three-digit number.

The internal ID of an object is calculated by appending the repository ID to an auto-incremented number: `<uniqueNo><RepoID>`
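As a rough illustration of the `<uniqueNo><RepoID>` rule above, here is a minimal Python sketch. The function names `internal_id` and `repo_id_of` are made up for this post and are not part of ODI; the sketch only mirrors the padding and appending described here.

```python
def internal_id(unique_no: int, repo_id: int) -> str:
    """Build an ID of the form <uniqueNo><RepoID> (hypothetical helper)."""
    if not 0 <= repo_id <= 999:
        raise ValueError("repository ID must be between 0 and 999")
    # The repository ID is zero-padded to three digits, e.g. 2 -> "002".
    return f"{unique_no}{repo_id:03d}"


def repo_id_of(internal: str) -> int:
    """Read the repository ID back from the last three digits."""
    return int(internal[-3:])


print(internal_id(11, 2))    # unique number 11 in repository 2
print(repo_id_of("11002"))   # repository the object was created in
```

Reading the last three digits back is handy when you want to tell which repository an object originally came from.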

Now that we understand that ODI identifies objects by their internal IDs, note the consequence: if two different Master/Work Repositories have the same repository ID, ODI will treat them as containing the same data, even if they actually contain different objects.

All the objects in a Work Repository are related to each other through these IDs, and so are the underlying repository tables.

However, objects in the Master Repository and the Work Repository are related to each other by code rather than by ID.

If objects from one repository are imported into another repository, there is a chance of a missing reference error. This happens because the imported object refers to an object ID that is not present in the new repository.

If you get an error like this, import the parent element first and then the child.
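The "parent first, then child" rule is really just a dependency ordering. Here is a small sketch of that idea using Python's standard `graphlib` module (Python 3.9+). The object names and the `parent_of` mapping are invented for illustration; ODI does not expose anything like this function.

```python
from graphlib import TopologicalSorter


def import_order(parent_of: dict) -> list:
    """Return the objects ordered so that every parent precedes its children.

    parent_of maps each object name to its parent name (or None for a root).
    """
    # TopologicalSorter expects: node -> set of nodes that must come first.
    graph = {
        child: ({parent} if parent is not None else set())
        for child, parent in parent_of.items()
    }
    return list(TopologicalSorter(graph).static_order())


# Hypothetical export set: a project tree and a model tree.
exports = {
    "INTERFACE": "FOLDER",
    "FOLDER": "PROJECT",
    "PROJECT": None,
    "DATASTORE": "MODEL",
    "MODEL": None,
}
print(import_order(exports))
```

Importing in the returned order means a child never arrives before the object it refers to.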

In a short while, we'll look at how to import/export a project in a typical full development lifecycle for your customer.

First, let's explore the various import/export modes. This will cover everything required to understand the development flow in a typical ODI project.

Smart Import and Smart Export: The Smart Import and Smart Export features take care of the parent-child dependencies of the objects being imported or exported.

Because ODI resolves the dependencies for you, the missing reference problem does not normally arise.

Import in Duplication mode: Every imported object is created in the work repository with a new ID of the form `x<work repository ID>`, where x is a newly generated number.

However, if the imported object references another object by ID, then either the referenced object must already be present in the repository or you will have to fix the missing reference errors manually.

IDs generated in Duplication mode (or by any import) are assigned per object type.

So it is possible for a model folder to have 11101 as its ID while, in the same repository, 11101 is also the ID of a datastore.
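To picture why two objects of different types can share an ID, here is a toy model of Duplication mode. Everything in it (the `WorkRepository` class, the per-type counters) is an assumption made for illustration, not how ODI is actually implemented.

```python
from collections import defaultdict


class WorkRepository:
    """Toy work repository that hands out IDs per object type."""

    def __init__(self, repo_id: int):
        self.repo_id = repo_id
        self._counters = defaultdict(int)  # assumed: one counter per object type
        self.objects = {}                  # (object_type, internal_id) -> name

    def import_duplication(self, object_type: str, name: str) -> str:
        """Store the object under a brand-new ID ending in this repository's ID."""
        self._counters[object_type] += 1
        new_id = f"{self._counters[object_type]}{self.repo_id:03d}"
        self.objects[(object_type, new_id)] = name
        return new_id


repo = WorkRepository(101)
folder_id = repo.import_duplication("model_folder", "SALES_FOLDER")
store_id = repo.import_duplication("datastore", "CUSTOMERS")
print(folder_id, store_id)  # same ID string, different object types
```

Because the key is the pair (object type, ID), the two objects never collide even though the ID strings match.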

Synonym Insert mode:

1. The same object ID is preserved in the target.

2. Missing references are not recalculated.

3. If an object with the same ID already exists in the repository, nothing is inserted.

4. If any of the incoming attributes violates a referential constraint, the import is aborted and an error message is raised.

5. Note that sessions can only be imported in this mode.
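The first and third rules of Synonym Insert mode can be sketched in a few lines of Python. The repository here is just a dictionary keyed by internal ID, and `synonym_insert` is a hypothetical name; the referential-constraint check from rule 4 is left out of this sketch.

```python
def synonym_insert(repo: dict, incoming: dict) -> list:
    """Insert incoming objects under their original IDs.

    Objects whose ID already exists in repo are skipped untouched.
    Returns the list of IDs that were actually inserted.
    """
    inserted = []
    for obj_id, obj in incoming.items():
        if obj_id in repo:
            continue  # same ID already present: nothing is inserted
        repo[obj_id] = obj  # ID is preserved, not regenerated
        inserted.append(obj_id)
    return inserted


target = {"1002": "EXISTING_INTERFACE"}
export_file = {"1002": "CHANGED_INTERFACE", "2002": "NEW_INTERFACE"}
print(synonym_insert(target, export_file))
print(target)
```

Note that the existing object keeps its old content, which is exactly why this mode is safe for adding objects but useless for updating them.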

Synonym Update mode:

Tries to modify the same object (with the same internal ID) in the repository.

This import type updates the objects already existing in the target Repository with the content of the export file.

If the object does not exist, the object is not imported.

Note that this import type does NOT delete child objects that exist in the repository but are not in the export file. For example, if the target repository contains a project with some variables and you want to replace it with one that contains no variables, this mode will update for example the project name but will not delete the variables under this project. The Synonym Mode INSERT_UPDATE should be used for this purpose.

Synonym Insert_Update mode:

If no ODI object exists in the target Repository with an identical ID, this import type will create a new object with the content of the export file. Already existing objects (with an identical ID) will be updated; the new ones, inserted.

Existing child objects will be updated, non-existing child objects will be inserted, and child objects existing in the repository but not in the export file will be deleted.

Dependencies between objects that are included in the export, such as parent/child relationships, are preserved. References to objects that are not included in the export are not recalculated.

This import type is not recommended when the export was done without the child components. This will delete all sub-components of the existing object.
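The difference between the Update and Insert_Update synonym modes is easiest to see with the project-and-variables example. Below is a toy comparison in Python. The data layout (a `name` plus a `children` dictionary) and both function names are assumptions for illustration. In particular, how Update treats children that are missing from the repository is my assumption: the sketch skips them, mirroring the rule for top-level objects.

```python
import copy


def synonym_update(repo: dict, obj_id: str, incoming: dict) -> bool:
    """Update an existing object; never insert, never delete children."""
    if obj_id not in repo:
        return False  # object does not exist: it is not imported
    target = repo[obj_id]
    target["name"] = incoming["name"]
    for child_id, child in incoming["children"].items():
        if child_id in target["children"]:  # assumption: only existing children
            target["children"][child_id] = child
    return True


def synonym_insert_update(repo: dict, obj_id: str, incoming: dict) -> None:
    """Insert or update; children absent from the export are deleted.

    In this toy model that is the same as replacing the stored object.
    """
    repo[obj_id] = copy.deepcopy(incoming)


repo = {"1101": {"name": "OLD_PROJECT", "children": {"5101": "VAR_A"}}}
export_file = {"name": "NEW_PROJECT", "children": {}}

after_update = copy.deepcopy(repo)
synonym_update(after_update, "1101", export_file)
print(after_update)  # renamed, VAR_A still there

after_insert_update = copy.deepcopy(repo)
synonym_insert_update(after_insert_update, "1101", export_file)
print(after_insert_update)  # renamed, VAR_A gone
```

The second result also shows the danger mentioned above: an export made without child components wipes out the children in the target.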

Import Replace mode:

This import type replaces an already existing object in the target repository by one object of the same object type specified in the import file.

This import type is only supported for scenarios, Knowledge Modules, actions, and action groups and replaces all children objects with the children objects from the imported object.

Note the following when using the Import Replace mode:

If your object is currently used by another ODI component (for example, a KM used by an integration interface), this relationship is not affected by the import. The interfaces will automatically use the new KM in the project.

Here's a word of caution:

When replacing a Knowledge module by another one, Oracle Data Integrator sets the options in the new module using option name matching with the old module's options. New options are set to the default value. It is advised to check the values of these options in the interfaces.

Replacing a KM by another one may lead to issues if the KMs are radically different. It is advised to check the interface's design and execution with the new KM.
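The option name matching described above boils down to a dictionary lookup with a fallback. Here is a short Python sketch of it. The function name `carry_over_options` and the option names in the example are purely illustrative.

```python
def carry_over_options(old_values: dict, new_defaults: dict) -> dict:
    """Set the new KM's options by matching names with the old KM.

    Options that exist in both keep the old value; options that are new
    take their default; old options with no match are dropped.
    """
    return {
        name: old_values.get(name, default)
        for name, default in new_defaults.items()
    }


old_km = {"TRUNCATE": True, "COMMIT": False}
new_km_defaults = {"TRUNCATE": False, "FLOW_CONTROL": True}
print(carry_over_options(old_km, new_km_defaults))
```

In this example the old `COMMIT` setting silently disappears, which is one reason to re-check the option values in your interfaces after a replace.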

Now suppose you are in a Development environment and are asked to set up your ODI workspace, given only the URL of the SVN repository where the ODI artefacts are kept.

Follow these steps:

1. First ask your Infra team for the Work Repository details and try to connect to that schema.

2. Once you connect to the schema, check whether the ODI objects have already been imported by another developer. If yes, you are spared this task.

3. If not, you need to do the import yourself.

4. Check what kind of exports exist for your project. If they are Smart Exports, nothing else is required: the dependencies are taken care of and you just need to run a Smart Import.

5. If the exports are not Smart Exports, import in this order: topology, then model folders, then models (if model folders and models were exported separately), then projects. To avoid conflicts, import in Synonym Insert_Update mode.

6. Once the import completes, an import report is generated. Bingo, your project setup is done.
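If you have a pile of non-smart export files, the ordering from step 5 can be sketched as a simple sort. This is only a planning helper in Python; the `kind` labels, file names, and the `plan_imports` function are made up for this post, and the actual import is still done through ODI Studio.

```python
# Order from step 5: topology first, projects last.
IMPORT_ORDER = ["topology", "model_folder", "model", "project"]


def plan_imports(exports: list) -> list:
    """Sort export files into the order in which they should be imported."""
    rank = {kind: position for position, kind in enumerate(IMPORT_ORDER)}
    # sorted() is stable, so files of the same kind keep their original order.
    return sorted(exports, key=lambda export: rank[export["kind"]])


checked_out = [
    {"file": "sales_project.xml", "kind": "project"},
    {"file": "sales_model.xml", "kind": "model"},
    {"file": "topology.xml", "kind": "topology"},
    {"file": "sales_folder.xml", "kind": "model_folder"},
]
for export in plan_imports(checked_out):
    print(export["kind"], export["file"])
```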

Thanks for Reading this!!

Peace!!